Update 5-Sept-2025: Qantas trims CEO’s bonus (by $250,000) following July cybersecurity incident

In this edition of our CISO Advisor series we shift from a technical to a strategic scenario that puts the CISO on the hot seat.

The CEO has read headlines about high GenAI pilot failure rates and weak decision-making results. Wondering whether the hype is fading, the CEO asks the CISO to brief executive leadership and deliver, within seven days, a report that justifies continued funding for current GenAI security projects and upcoming budget requests.

The CISO’s brief will demonstrate:

- Awareness of the issues raised in recent coverage,

- Impact on the business and risk posture, and

- Action – a clear plan showing anticipation of these concerns and ability to execute.

Bottom line: Some CISOs might be put on the defensive by such a request. But the cerebral CISO is confident that their AIA model – Awareness, Impact, Action – prepares them to handle it. The CISO is aware of the complex forces shaping the threat landscape, their impact on the organization and industry, and the action needed to achieve the desired results. The CISO’s plan is to acknowledge the concerns, add context, and recommend decisive steps. The recent criticisms behind the CEO’s question are based on surveys of GenAI pilots across the whole enterprise; when we narrow the lens to GenAI pilots in cybersecurity, the results are more positive. Moreover, with the rise of AI-enabled attackers, ongoing geopolitical and economic pressures, and a shortage of skilled GenAI-security talent, the case for greater investment in GenAI for cybersecurity is even more urgent. Rather than being defensive, the cerebral CISO feels compelled to act, knows what to do, and will seek additional funding. Fighting wars and crime can’t be done on the cheap. There’s no going back.

Awareness

It’s fair to say that ‘AI backlash’ has surged across business and tech. In this section we separate the primary research from the amplifying headlines, review the characteristics of successful projects, and consider what motivates and matters to a CISO.

GenAI Backlash

The CEO’s request may have been sparked by coverage of the MIT NANDA report (July 2025) on weak pilot-to-scale conversion and the Harvard Business Review (HBR) report on GenAI’s mixed effects on executives’ predictions [1,6]. Major outlets amplified these findings: Fortune and Forbes ran ‘95% of GenAI pilots fail’ headlines [2–4], and NYT’s Hard Fork added market context and bubble skepticism [5].

Reporting on the HBR work, alongside related studies from Carnegie Mellon University (CMU) and LinkedIn, argued that while GenAI helps with some tasks it does not reliably improve executive judgment, a point echoed by Forbes and Axios [7–10].

Beyond the Headlines

For a strategic brief, the CISO team must drill into the MIT methodology and widen the context. The widely quoted “95% failure” refers to pilots that didn’t drive rapid revenue acceleration – a metric less relevant to back-office functions like cybersecurity. MIT’s analysis indicates pilot-to-scale success varies by function, sector, and company size, with some back-office functions (including cybersecurity), certain industries (Tech and Media), and mid-market firms showing higher success rates.

What the successful do differently

While more details are described in the Impact section, the research suggests that the more successful organizations do the following:

- Select projects with high-value, narrow workflows to gain practical experience and earn visible wins.

- Buy before build to simplify and speed integration.

- Use and adapt commercial tools (e.g., ChatGPT, Claude) and embed them in workflows.

- Enable bottom-up experimentation (i.e., “shadow AI”) with policy and oversight.

Leading organizations are also beginning to deploy agentic security assistants for well-defined use cases with bounded autonomy – systems that can remember context, learn, and take constrained actions with human approval.

Success Headlines

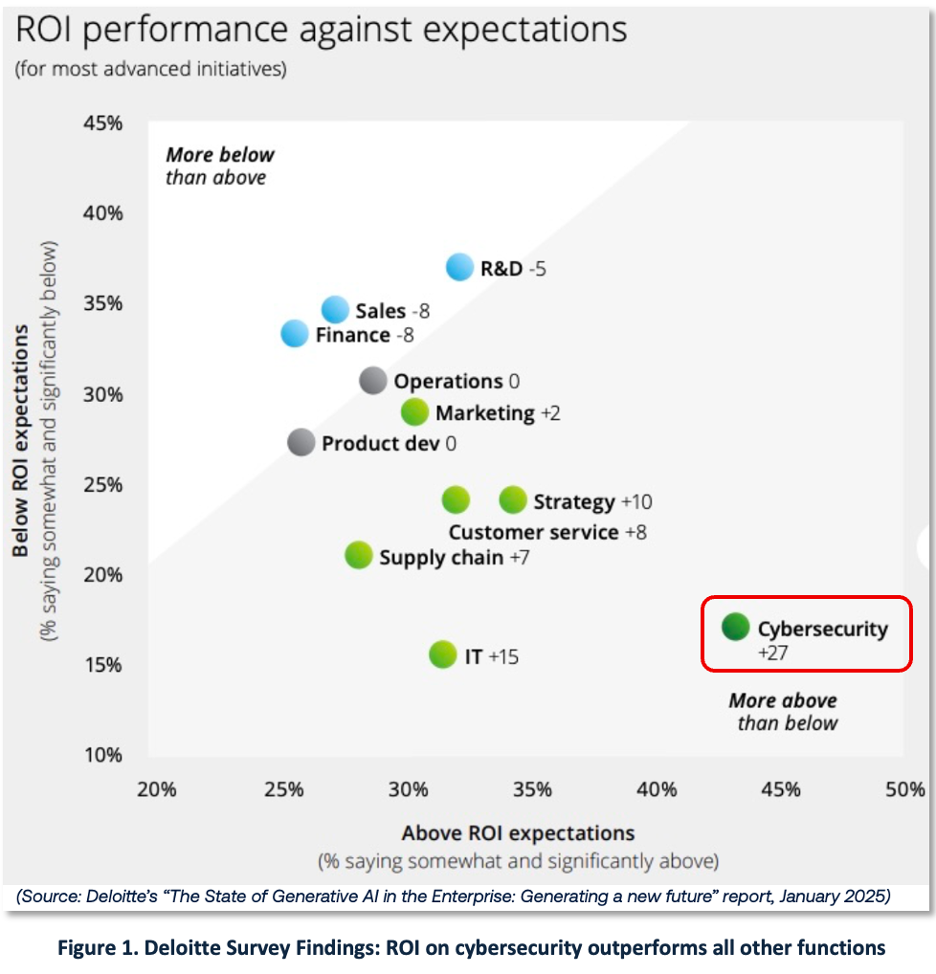

Deloitte’s survey of more than 2,700 director-to-C-suite level respondents provides a compelling counterargument: cybersecurity GenAI initiatives are the most likely to exceed ROI expectations (44% above expectations) [12]. Deloitte’s finding is consistent with MIT’s pattern of back-office outperformance.

Deployment data also suggests momentum: Deloitte notes 86% of organizations already use AI-based tools for continuous monitoring – likely including ML as well as GenAI.

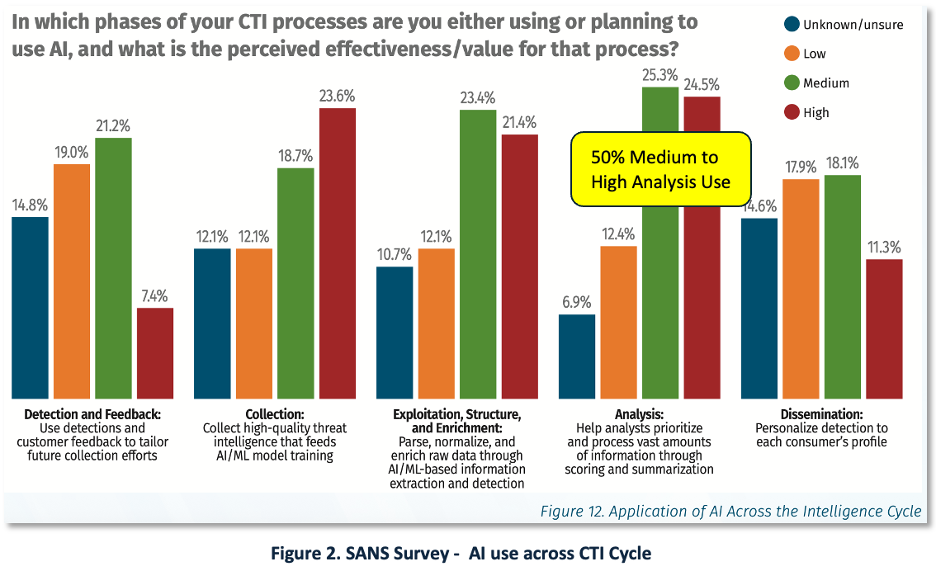

A SANS survey (~500 CTI professionals) finds over one-third already use AI for tactical, operational, and strategic CTI (Cyber Threat Intelligence). Figure 2 shows current/planned use across the intelligence cycle, with ~50% reporting medium–high perceived value for analysis [13]. Note: It is not clear whether this data includes both GenAI and ML, or what is planned vs. actual use.

Fear Headlines

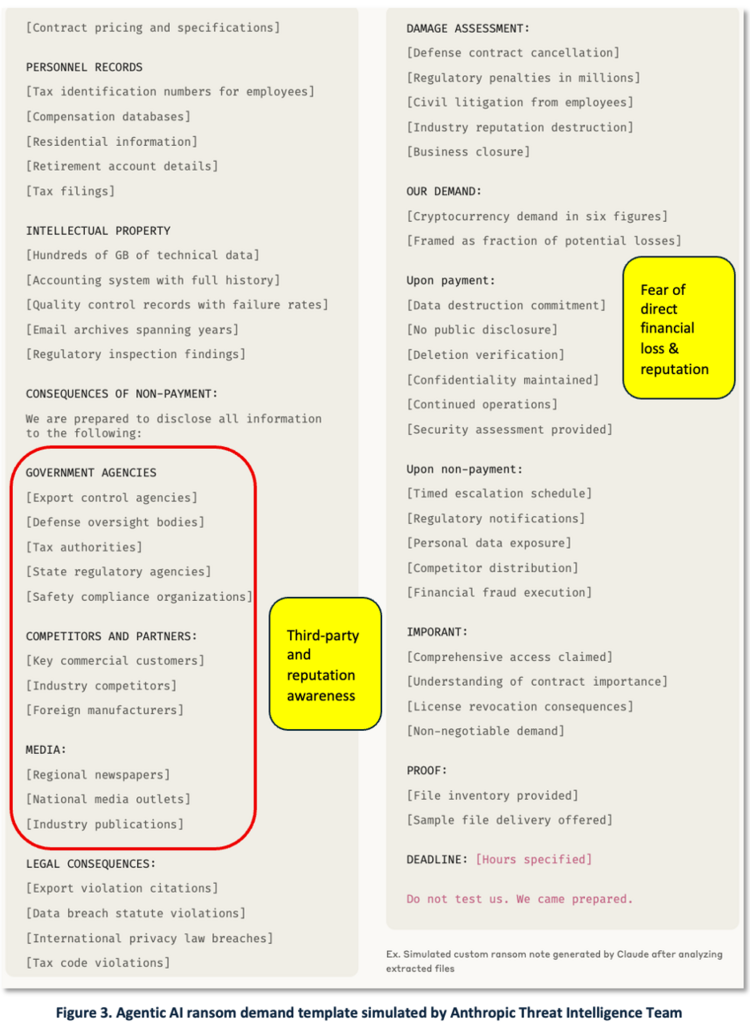

Behavioral-economics research on loss aversion, which holds that a loss weighs more heavily than an equivalent gain, can also be used to justify cybersecurity investments, particularly in the face of rising adversary capability and use [28]. Anthropic’s recent research on adversarial AI details the weaponization of agentic AI in at least seventeen suspected attacks by cybercriminals against targets in critical infrastructure sectors, so we assume that fear of economic loss is a powerful motivator for cybersecurity funding decisions [14]. With amplification from ESET Research, Forrester, and Wired, this is shaping up as a major evolution in adversary capabilities and yet another issue that CISOs must combat [15–17].

Figure 3 shows a ransomware demand from Anthropic’s simulation of observed threat actor behavior.
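To make the loss-aversion argument above concrete, here is a minimal Python sketch of the prospect-theory value function. It is an illustration only and is not drawn from the cited sources: the function name, the dollar amount, and the parameter values (a loss-aversion coefficient of 2.25 and a curvature of 0.88, the estimates commonly attributed to Tversky and Kahneman's 1992 paper) are assumptions chosen for the example.

```python
def subjective_value(outcome: float,
                     loss_aversion: float = 2.25,
                     curvature: float = 0.88) -> float:
    """Prospect-theory style value of a gain (positive) or loss (negative).

    The default parameters are illustrative assumptions, not figures
    taken from this article's references.
    """
    if outcome >= 0:
        return outcome ** curvature
    # Losses are scaled up by the loss-aversion coefficient.
    return -loss_aversion * ((-outcome) ** curvature)


# Hypothetical amount: an equally sized saving and breach loss.
amount = 100_000
felt_gain = subjective_value(amount)
felt_loss = subjective_value(-amount)

# With equal curvature on both sides, the ratio equals the coefficient.
loss_to_gain_ratio = abs(felt_loss) / felt_gain
print(f"A loss feels about {loss_to_gain_ratio:.2f}x as large as an equal gain")
```

Under these assumed parameters, an avoided loss carries more than twice the subjective weight of an equal saving, which is why a funding case framed around expected loss may land harder than one framed around efficiency gains.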

Impact

The cerebral CISO translates recent research into management practices that improve outcomes and justify continued investment. The executive brief centers on the following:

MIT – What successful teams do:

- Start with high-value, narrow workflows to earn visible wins.

- Treat shadow AI as inevitable: govern it, learn from it, and embed domain expertise in daily operations.

- Build trust through peer references and internal champions.

- Favor commercial tools with low setup burden and fast time-to-value (e.g., ChatGPT, Claude) and integrate them into workflows.

- Close the loop: capture feedback, retain context, and tailor tools to specific teams.

- Use adaptive org structures that decentralize implementation authority.

- Encourage external partnerships: the MIT research found “external partnerships reach deployment about twice as often (around 67%) as internally built efforts (roughly 33%)”.

- Update hiring and skills to require practical AI literacy.

- Move from ad-hoc bots to bounded-autonomy assistants that can learn from feedback and orchestrate tasks with human approval.

- Realize measurable savings by automating and insourcing work that previously required BPO/agency spend.

HBR (Harvard Business Review) – Safe, effective decision support:

- Recognize LLMs can sound plausible while being wrong (illusion of knowledge); use outputs as the starting point, not the final answer.

- Maintain healthy skepticism in evaluation of LLM output; use critical thinking and encourage peer review before acting on AI recommendations.

- Publish guidelines and train users on best practices and responsible use of AI advisors and assistants.

- Keep human judgment central: AI may change how decisions are made, but even seasoned executives can be swayed by convincing AI.

Forrester / Google – Operational Realities:

- CTI teams and analysts are overwhelmed by the volume of threats and may miss critical alerts; 61% report having too many threat intelligence feeds.

- Human expertise is still essential, yet talent is constrained: 60% of respondents report a shortage of skilled analysts. Augment, don’t replace.

Anthropic – Adversary shift:

- As criminals experiment with agentic AI to scale reconnaissance, intrusion, exfiltration, and negotiation, enterprises are compelled to invest in workforce training and AI-enabled defenses.

Action

The CISO owns security strategy, operations, people, and architecture – and, as a senior executive, manages relationships up, across, down, and outside the enterprise. Here are four recommendations for action that the CISO could make in their RFI response.

1) Research BPO Insourcing (targeted wins)

The MIT research notes the potential for wins with measurable savings from projects that reduce spending on BPO and external agency contracts. Such projects draw on commercial tools and shadow AI experience, applied to high-value use cases with narrow workflows.

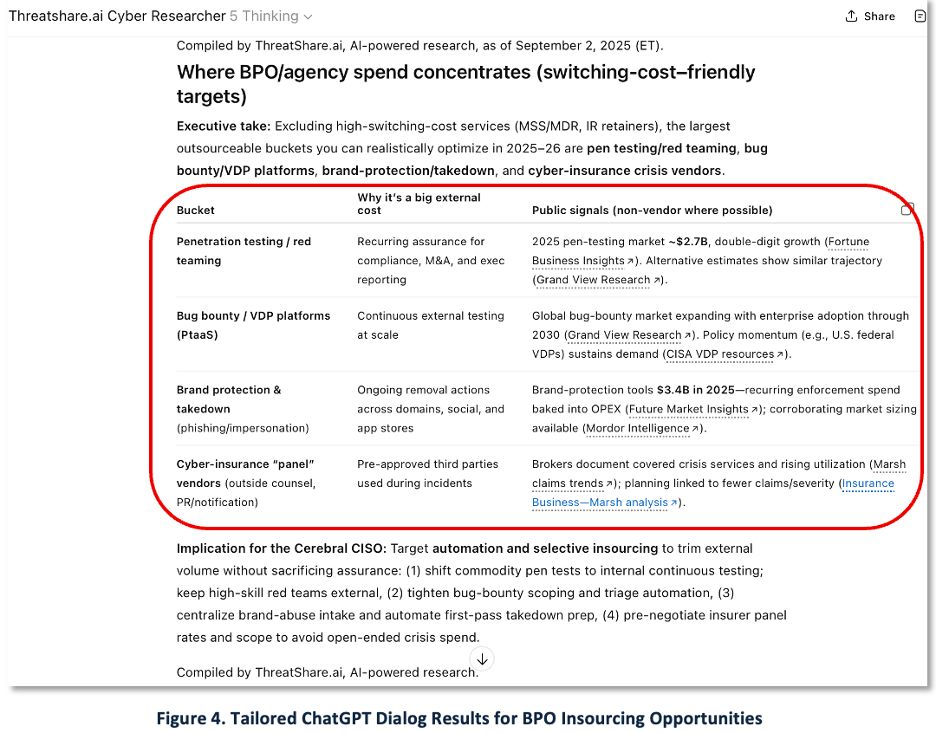

The following scenario demonstrates these principles. Figure 4 shows a screen capture of ThreatShare.ai Cyber Researcher, our cybersecurity domain-tuned ChatGPT. In a multi-turn dialogue (not a one-shot prompt), a senior analyst used ChatGPT to explore commonly outsourced functions. Four candidates emerged; we propose deeper analysis of three where we have domain expertise: red teaming, brand protection & domain takedowns, and cyber-insurance panel services. We explicitly excluded MSSP and DFIR retainers due to high switching costs and risk, based on analyst judgment and operational experience.

2) Adopt Agentic AI Cyber (with guardrails)

The MIT research recommends that organizations move beyond prompt-based assistants to agentic AI solutions that can autonomously discover, negotiate, act, and learn. Multiple commercial options exist (e.g., CrowdStrike, Palo Alto Networks, Check Point, Flashpoint); for a neutral and thorough overview of capabilities and maturity, see SOCRadar’s report and demo [22].

3) Invest in Workforce Readiness

The AI skills gap is a significant constraint. Accenture, which has completed more than 2,000 generative AI projects (Fortune 31-Aug-2025), reports 83% of executives cite workforce limits as a top barrier, yet only 42% balance AI development with security investment [20].

4) Counter Adversarial AI Proliferation

Anthropic documents criminal use of agentic AI to scale reconnaissance, intrusion, and negotiation, elevating expected loss [14]. CrowdStrike CEO George Kurtz warns of a rapid proliferation of threat actors leveraging GenAI to scale attacks, with the potential for exponential growth [21]. And bearing out Thomas Friedman’s observation that “Bad guys are always early adopters!”, SC Media reports that multiple ransomware groups are already using AI to negotiate ransoms and manipulate victims [29,25].

Bottom line: CISOs, CEOs, and governments are compelled to act – insource where it’s practical, deploy agentic capabilities with guardrails, upskill the workforce, and harden against AI-enabled adversaries.

References

1. MIT NANDA – The GenAI Divide: State of AI in Business 2025, July 2025

2. Fortune – MIT report: 95% of generative AI pilots at companies are failing, 18-Aug-2025

3. Forbes – Why 95% Of AI Pilots Fail, And What Business Leaders Should Do Instead, 21-Aug-2025

4. Fortune – ‘It’s almost tragic’: Bubble or not, the AI backlash is validating what one researcher and critic has been saying for years, 24-Aug-2025

5. NYTimes Hard Fork – Is this an AI Bubble + Meta’s Missing Morals + TikTok Shock Slop, 22-Aug-2025

6. Harvard Business Review – Research: Executives Who Used Gen AI Made Worse Predictions, 1-July-2025

7. Springer (CMU) – Cash, T.N., Oppenheimer, D.M., Christie, S. et al. Quantifying uncert-AI-nty: Testing the accuracy of LLMs’ confidence judgments. Mem Cogn (2025), 22-July-2025

8. LinkedIn – Networks, Not AI or Search, Are the #1 Trusted Source Amid Information Overload, LinkedIn Research Finds, 26-Aug-2025

9. Forbes – AI Is Faster, Humans Are Smarter—In A Fast World, Humans Might Lose Out, 27-Aug-2025

10. Axios – AI is overconfident even when wrong, says report, 27-Aug-2025

11. Google Cloud Blog – Too many threats, too much data, say security and IT leaders. Here’s how to fix that, 28-July-2025

12. Deloitte – Now decides next: Generating a new future, January 2025

13. SANS – SANS 2025 CTI Survey: Navigating Uncertainty in Today’s Threat Landscape, May 2025

14. Anthropic – Detecting and countering misuse of AI: August 2025, 27-Aug-2025

15. ESET Research – ESET discovers PromptLock, the first AI-powered ransomware, 27-Aug-2025

16. Forrester – Vibe Hacking And No-Code Ransomware: AI’s Dark Side Is Here, 28-Aug-2025

17. Wired – The Era of AI-Generated Ransomware Has Arrived, 27-Aug-2025

18. Fortune – Over half of professionals think AI trainings feel like a second job, LinkedIn survey finds, 28-Aug-2025

19. Forrester – Threat Intelligence Benchmark: Stop Reacting; Start Anticipating, July 2025

20. Accenture – State of Cybersecurity Resilience 2025, 25-June-2025

21. Barron’s – AI Gives Hackers New Superpowers, Says CrowdStrike CEO Kurtz, 5-June-2025

22. SOCRadar – Agentic AI: All You Need to Know, 22-Aug-2025

23. DarkReading – Insurers May Limit Payments in Cases of Unpatched CVEs, 22-Aug-2025

24. SC Media – Security Chaos Engineering: Weaponizing chaos for modern CISOs, 27-Aug-2025

25. SC Media | Cyber Risk Alliance – How AI has changed ransomware negotiations, 2-Sept-2025

26. PNAS – Cross-national evidence of a negativity bias in psychophysiological reactions to news, 3-Sept-2019

27. SPADE – SPADE: Enhancing Adaptive Cyber Deception Strategies with Generative AI and Structured Prompt Engineering, January 2025 https://arxiv.org/pdf/2501.00940

28. Investopedia – Loss Aversion: Definition, Risks in Trading, and How to Minimize, 26-April-2024

29. NYTimes – The One Danger That Should Unite the U.S. and China, 2-Sept-2025

Editor’s Notes

This post was conceived, drafted, and reviewed by human cybersecurity analysts. We used ThreatShare.ai Cyber Researcher, ChatGPT, Google Gemini AI, Anthropic Claude, and Perplexity as GenAI assistants for brainstorming, search, summarization, entity extraction, transformation and enrichment, script and code generation, and editorial review.