This post is written for CISOs (Chief Information Security Officers) and cybersecurity teams in large enterprises within critical infrastructure sectors. It is informed by recent research, reporting, and hands-on experience with Generative AI (GenAI), focusing on four key themes:

- The importance of critical thinking in the use of AI—and the effects of AI on critical thinking

- The impact of AI on cybersecurity workforce roles, skills, and training

- Practical use-cases relevant to CISO-led security teams

- The current and future outlook for GenAI adoption in enterprise cybersecurity

But before diving in, let’s take a moment to reflect.

Over the past two and a half years, I’ve created thousands of prompts and system prompts related to cybersecurity across multiple LLMs (Large Language Models) and chatbot interfaces. This practical engagement has reinforced just how essential critical thinking is — particularly when it comes to prompt development, refinement, and operational management.

I had originally planned to publish this post on April 11. But as often happens in rapidly evolving and hype-driven fields like GenAI for cybersecurity, a flood of content — some useful, much of it not — outpaced my ability to manage it. The realization that I had fallen into an overthinking trap turned into a valuable reminder: thoughtful prompting and informed judgment are more necessary than ever.

With that reflection in mind, here is the bottom-line perspective that shapes this post:

- Despite the hype, technical immaturity, and well-known limitations, GenAI and AI Agents remain the most viable tools currently available to help CISOs address skill shortages and defend against increasingly complex cyber threats.

- While enterprise adoption of GenAI in cybersecurity is underway, most deployments are still early-stage pilots or narrowly scoped experiments.

- Building critical thinking into GenAI prompt engineering and knowledge elicitation — through phased consulting engagements and training — is a practical and scalable strategy for enabling continuous learning and supporting long-term digital transformation.

Critical Thinking and AI: Definition, Requirements, Effects

In this section, we:

- Define critical thinking in the context of GenAI and cybersecurity

- Explore the importance of soft skills linked to critical thinking

- Review recent research on how GenAI impacts cognitive behavior

Definition: Critical thinking is a complex and ambiguous concept with no single definition. Drawing on our experience, and with help from Wikipedia, MIT Horizon [1], and our ensemble of chatbots (from OpenAI, Perplexity, Google Gemini, and Anthropic), we define critical thinking for GenAI in cybersecurity as follows: Critical thinking is a reflective, self-directed, analytical, and learning-oriented process used to evaluate observations, arguments, and evidence in order to reach sound conclusions. It involves recognizing assumptions, applying comparative analysis, and drawing on professional skepticism, domain expertise (e.g., cybersecurity), sector knowledge, institutional context, and tacit knowledge.

‘Soft skills are the new hard skills’: [9] As cybersecurity teams adopt GenAI, critical thinking is becoming one of the most valuable and scarce skills. George Lee, co-head of applied innovation at Goldman Sachs, argues that AI puts a premium on cognitive skills like critical thinking, logic, rhetoric, and creativity—traditionally considered “soft” and often associated with liberal arts backgrounds [2].

Deloitte similarly advises that CISOs should encourage critical thinking to mitigate GenAI-related risks. They also emphasize empathy as a core capability for cybersecurity teams supporting business stakeholders [3].

Google Gemini’s AI Overview offers a helpful framework for understanding the interplay between soft skills and critical thinking — illustrated in Figure 1.

Prompt engineering requires creative thinking skills: Effective prompt development demands soft skills that go far beyond technical command. Based on practical experience, the following are particularly important:

- Empathy and Creativity: Prompting is an iterative dialogue between the user and the AI. Success depends on the developer’s ability to empathize with the end user — not just understanding tasks and information needs, but also motivation and context. Creativity and curiosity are vital for crafting questions that explore “why,” “who,” “when,” and “how.” Prompt developers must also understand how GenAI systems operate in order to offset their inherent limitations.

- Patience and Perseverance: While GenAI can automate high-volume tasks, it is only as effective as the data and questions it receives. Simple but powerful prompts are often the result of disciplined, iterative dialogue over time — supported by robust system prompts and customer-centered data. Developers must also remain skeptical, as GenAI can produce errors or fail to follow instructions.

- Discipline and Decisiveness: GenAI is a dazzling research tool, far more powerful than search, with excellent precision and recall. But the more it finds, the greater the need for human review. Users must stay focused, follow a defined process, and recognize when they’re veering into unnecessary iterations or overthinking.

Paradox – AI can also undermine creative thinking skills: There’s a paradox at play. While GenAI demands critical thinking for effective use, research also shows that it can inhibit those same skills.

Microsoft researchers [4] found that users with greater trust in GenAI tend to exercise less critical thinking, while users with stronger self-confidence remain more analytical. A Swiss study published in Societies [5] observed a significant negative correlation between frequent AI use and critical thinking capacity — especially among younger or less experienced users. The researchers describe a growing reliance on AI for tasks that would otherwise require active reasoning, a phenomenon known as cognitive offloading.

Workforce Impact

This section provides an overview of factors and trends shaping workforce attitudes, priorities, and opportunities as GenAI gains traction in large enterprises.

Expert Opinion: Expert commentary plays a powerful role in shaping market sentiment and organizational behavior, whether grounded in evidence or speculation. When Bill Gates says that AI will replace many doctors and teachers and that humans won’t be needed ‘for most things’, it influences how people think about jobs, training, and careers. Likewise, when Microsoft CTO Kevin Scott says that ‘95% of code will be AI-generated within five years’, it has a ripple effect across the tech sector. [11]

Not all leaders take such an aggressive view. Perplexity CEO Aravind Srinivas emphasizes the importance of tacit knowledge: “There are very few people who really understand even prompt engineering, making these models do what you want them to do, making it do it reliably at scale.… This requires a lot of tacit knowledge that doesn’t exist in the … wider engineering community right now.” [12] Meta Chief AI Scientist Yann LeCun also takes a human-centric stance: “AI is not replacing people, it’s basically giving them power tools. … basically our relationship with future AI systems, including superintelligence, is that we’re going to be their boss.” [13]

Survey Data: Several large-scale studies provide additional insight:

The World Economic Forum’s Future of Jobs Report 2025, based on a survey of 1,000+ global employers, notes:

- “AI and big data” top the list of fastest-growing skills in tech, followed by networks and cybersecurity.

- “Analytical thinking” remains the most in-demand core skill, cited by 70% of employers, followed by resilience, agility, and leadership. [6]

The Pew Research survey of 5,000+ members of the public and 1,000+ AI experts found:

- 73% of experts viewed AI as having a positive impact on how people do their jobs (vs. 23% of the public).

- 69% of experts viewed AI as beneficial to the economy (vs. 21% of the public). [7]

The Slack Workforce Index survey, based on 17,000+ desk workers globally, found:

- 99% of executives are planning AI investments.

- 76% of knowledge workers feel urgency to become AI experts.

- Both groups agree that upskilling is a top priority.

- Some uncertainty persists among knowledge workers, suggesting a slowdown in adoption momentum. [8]

LinkedIn Job Trends: An anecdotal review of cybersecurity job listings on LinkedIn suggests that AI-related skills are not yet widely required. In a March 2024 posting for the EY Cyber Threat and Vulnerability Management (TVM) team, “Analytical and Critical Thinking” was listed as a desirable, but not required, qualification.

In March 2025, Wells Fargo had two postings for Senior Lead GenAI Literacy Consultants. Responsibilities included:

- Designing and executing GenAI literacy strategies.

- Leading organization-wide training on GenAI tools and concepts.

Requirements emphasized experience in promoting GenAI adoption through strategic education initiatives — signaling the emergence of a new internal role for AI enablement.

Forbes Reporting: In a Forbes interview, Aneesh Raman, LinkedIn’s Chief Economic Opportunity Officer, warned that AI will profoundly reshape knowledge work. As GenAI assumes many core tasks, success will increasingly depend on uniquely human capabilities.

Raman coined the phrase “soft skills are the new hard skills,” identifying the “five Cs” that define human value in an AI-driven workplace: Curiosity, Compassion, Creativity, Courage, and Communication. [9]

Additional reporting from the World Economic Forum and Forbes echoes the same trend:

- The most in-demand skills (critical thinking, emotional intelligence, and learning agility) are also the most human and the hardest to teach.

- Employers are advised to “augment, don’t automate” — and to build a workforce capable of managing, not just using, AI systems. [10]

As one Forbes contributor wrote: “The future of work won’t be built on AI — it will be built on the people who know how to work with it.”

‘AI First’ Hiring: In contrast to the ‘augment, don’t automate’ ethos, some companies are adopting a more candid approach. CEOs such as Tobias Lütke (Shopify) and Micha Kaufman (Fiverr) have publicly acknowledged that AI is already reducing the need for new hires. Their internal memos urge employees to adopt AI tools now, warning that output expectations will rise even as headcount shrinks. This shift signals a new era of radical candor and transparency in AI-driven workforce transformation. [24]

GenAI Cyber Examples

While GenAI remains in the early stages of technical maturity, the cybersecurity domain is proving to be fertile ground for innovation. Both established companies and emerging startups, including software vendors, managed services firms, and consultancies, are actively deploying GenAI to improve cybersecurity operations. [14–21]

In this section, we present a series of cybersecurity-focused use-cases developed using our own custom implementation: ThreatShare.ai Cyber Researcher, powered by OpenAI’s GPT-4o.

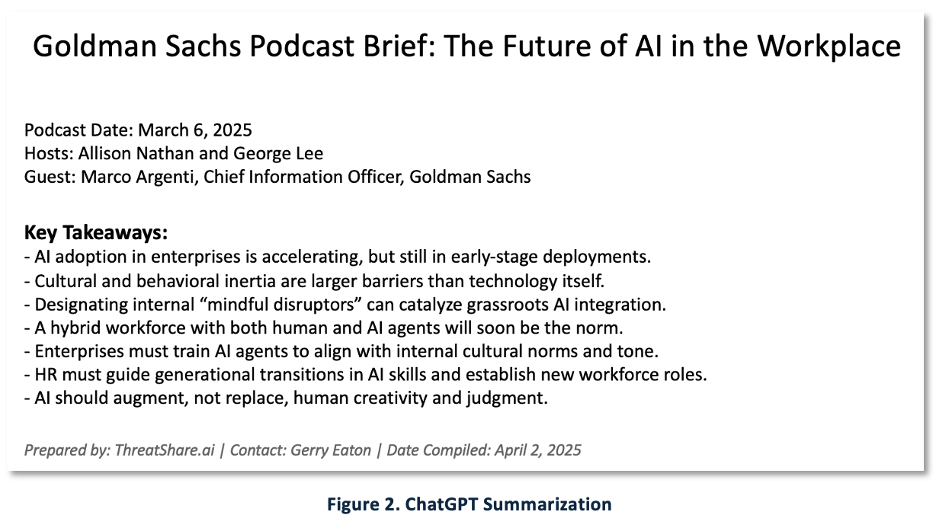

Use Case 1: Summarizing Long-Form Content

GenAI excels at summarizing unstructured documents — an essential task in security operations, training, and executive communication.

In Figure 2, we show a summary generated from a transcript of a Goldman Sachs podcast episode featuring CIO Marco Argenti on the future of AI in the workplace. [22] The original document was 4,823 words across 18 pages. Using ChatGPT, we generated both a concise narrative summary and a one-page PowerPoint briefing slide. The only modification to the output was a minor font size adjustment to the slide’s title.

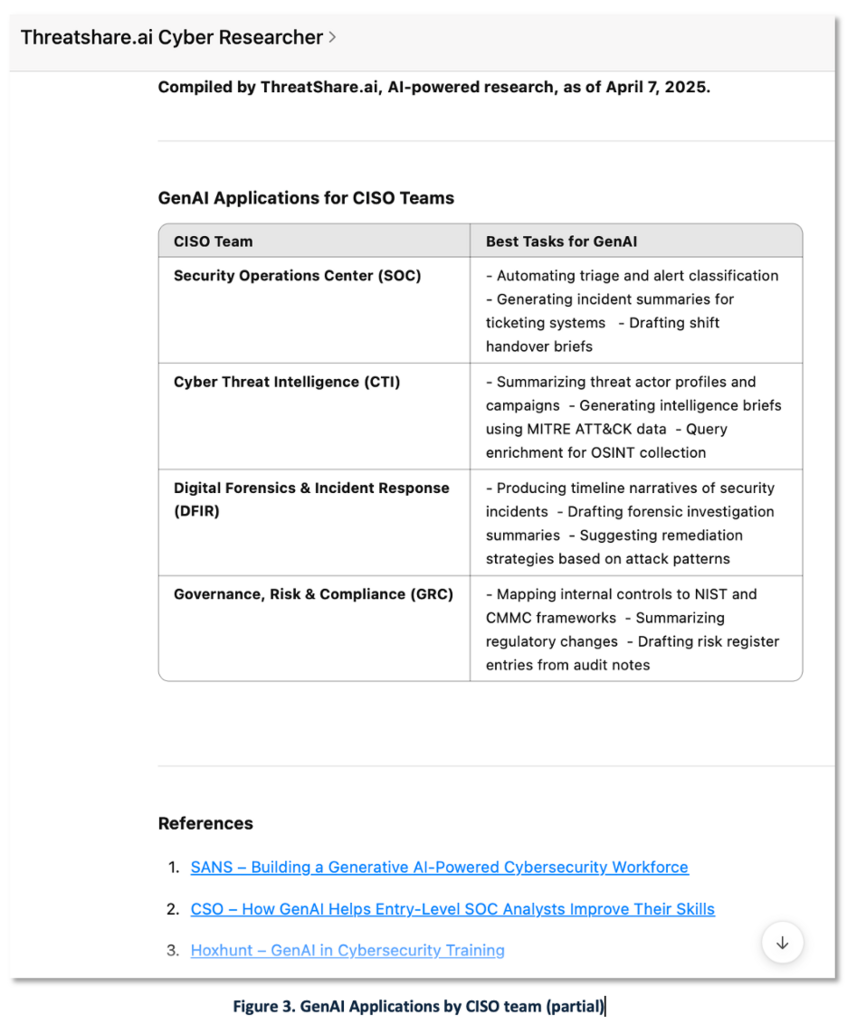

Use Case 2: Workforce Planning and Role-Based Recommendations

In Figure 3, we present a summary report developed for a hypothetical CISO leading cybersecurity operations at a large enterprise in the critical infrastructure sector.

The prompt requested a 2-column table listing:

- Four cybersecurity sub-teams

- Three recommended GenAI-related tasks per team

We defined the format in the user prompt and specified the header language and source citation references in our system prompts. The result delivers tailored, structured output to support CISO-level planning.

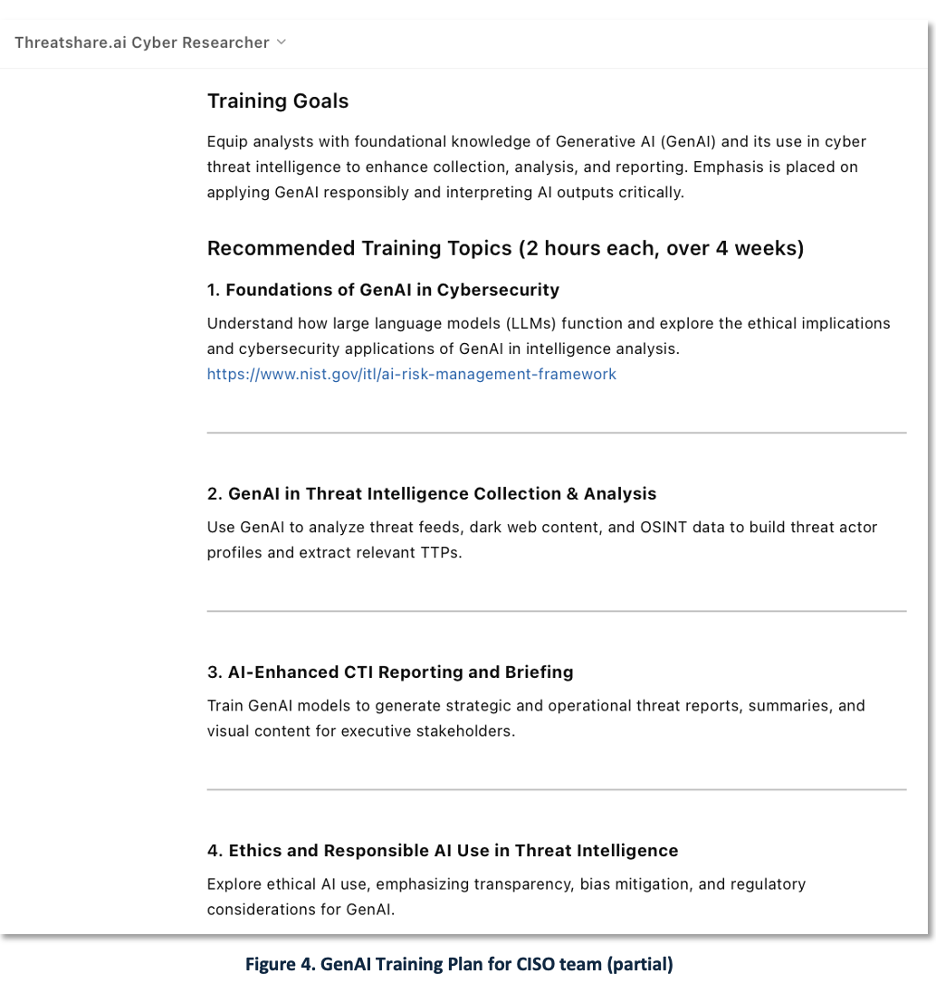
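To make the split between system prompt and user prompt concrete, here is a minimal sketch in Python. The prompt wording is hypothetical (it is not the text we used to produce Figure 3), and the names `SYSTEM_PROMPT`, `build_messages`, and `table_shape_ok` are illustrative assumptions. The message list uses the common role/content layout that chat-style LLM interfaces accept, and no model is called.

```python
# Hypothetical prompt wording, for illustration only; it is not the
# prompt text behind Figure 3.
SYSTEM_PROMPT = (
    "You are a cybersecurity research assistant supporting a CISO at a "
    "large critical-infrastructure enterprise. Use clear column headers "
    "and give a source citation reference for every recommendation."
)


def build_messages(num_teams=4, tasks_per_team=3):
    """Return a system + user message pair in role/content form."""
    user_prompt = (
        "Create a 2-column table. "
        f"Column 1: {num_teams} cybersecurity sub-teams. "
        f"Column 2: {tasks_per_team} recommended GenAI-related tasks per team."
    )
    return [
        {"role": "system", "content": SYSTEM_PROMPT},  # standing instructions
        {"role": "user", "content": user_prompt},      # per-request format
    ]


def table_shape_ok(rows, num_teams=4, tasks_per_team=3):
    """Check a parsed table: rows is a list of (team, [task, ...]) pairs."""
    return len(rows) == num_teams and all(
        len(tasks) == tasks_per_team for _, tasks in rows
    )
```

Keeping the format request in the user prompt and the standing rules in the system prompt means the same system prompt can be reused across requests, while a small shape check such as `table_shape_ok` gives a reviewer a quick first test before reading the content itself.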

Use Case 3: Training Program Development

Continuing with the scenario, the CISO requested a structured recommendation for an eight-hour training plan, delivered over four weeks in two-hour sessions.

Figure 4 shows how the model responded to this request with clear and actionable session topics. The response reflects the model’s ability to adapt content generation to specific enterprise scheduling and skill-building needs.

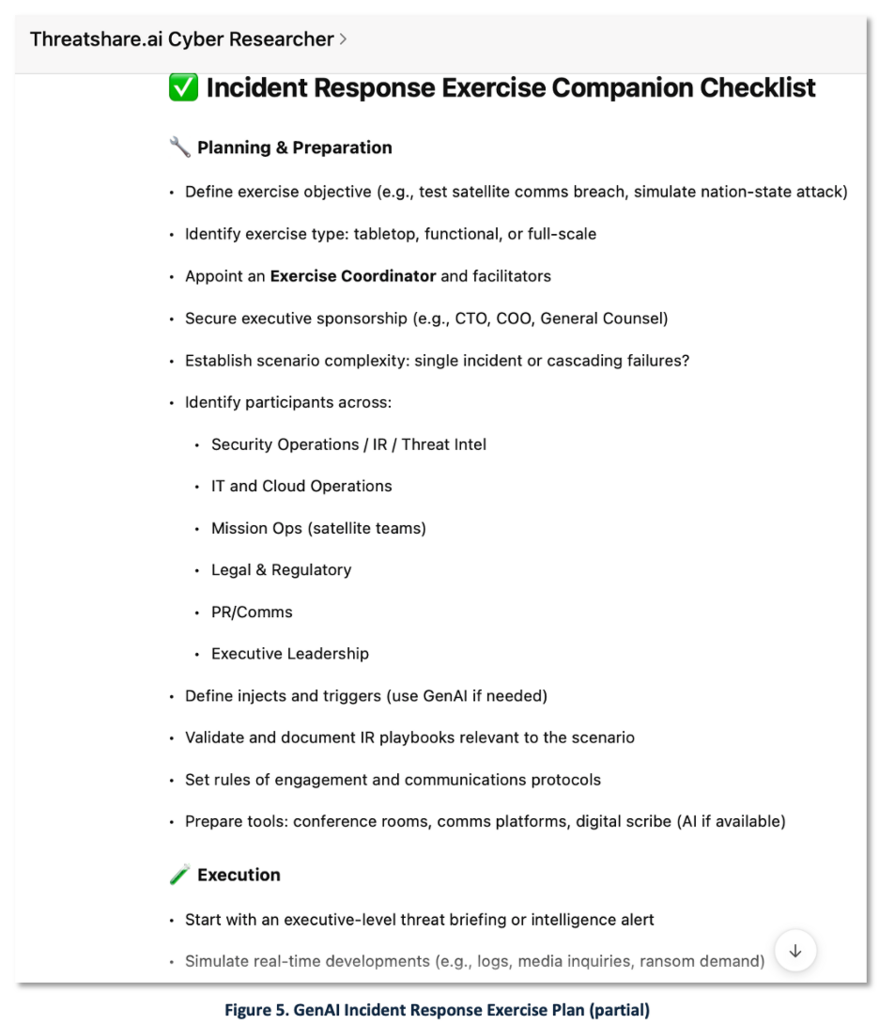
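The scheduling constraint in this request is simple arithmetic: eight hours in two-hour sessions is four sessions, one per week for four weeks. A reviewer can check a generated plan against that constraint before judging its content. The sketch below is one way to do so; the `Session` class and `plan_matches_request` function are hypothetical names, and the topics are placeholders rather than the contents of Figure 4.

```python
from dataclasses import dataclass


@dataclass
class Session:
    week: int     # 1-based week number
    hours: float  # session length in hours
    topic: str    # placeholder text, not taken from Figure 4


def plan_matches_request(sessions, total_hours=8, weeks=4, hours_per_session=2):
    """Return True if the plan has exactly one session per week, each of
    the requested length, and the hours add up to the requested total."""
    one_per_week = sorted(s.week for s in sessions) == list(range(1, weeks + 1))
    right_length = all(s.hours == hours_per_session for s in sessions)
    right_total = sum(s.hours for s in sessions) == total_hours
    return one_per_week and right_length and right_total


# Four two-hour sessions, one per week: 4 x 2 = 8 hours.
plan = [Session(week=w, hours=2, topic=f"Session topic {w}") for w in range(1, 5)]
print(plan_matches_request(plan))
```

A check like this does not say whether the topics are any good. It only confirms that the model followed the stated schedule, which is the kind of instruction-following error worth catching early.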

Use Case 4: Incident Response Exercise Planning

Finally, in Figure 5, we include a partial output from a GenAI-generated recommendation for updating an incident response exercise plan. This example shows how CISO teams can prompt GenAI to align exercises with evolving threat scenarios, internal processes, and compliance frameworks.

Outlook

As GenAI in cybersecurity matures from pilot experiments to production deployments, enterprise leaders must develop a realistic understanding of what adoption entails. This includes challenging marketing-driven narratives that promise “magic prompts” and claim prompt engineering is easy.

For example, a recent Forbes article, “5 Powerful AI Prompts That Can Boost Any Business Idea,” suggests that effective prompting is as simple as copying templates into ChatGPT. [25] While this may suffice for consumer applications, Figure 6 highlights the risk of trivializing a discipline that, in professional domains like cybersecurity, demands domain knowledge, critical thinking, and operational judgment.

Effective prompt engineering is not merely about asking for outputs. It’s about knowing what to ask, how to structure the prompt, how to evaluate the result, and how to iterate—all in the context of organizational goals, data constraints, and risk.
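The ask, evaluate, iterate cycle described above can be sketched as a small loop. This is an illustration under stated assumptions, not a description of any vendor's tooling: `refine_prompt`, `fake_model`, and `add_missing_instruction` are hypothetical names, the model is a stand-in function so the example runs without network access, and real evaluation would rely on human review in addition to automated checks.

```python
def refine_prompt(prompt, ask_model, checks, revise, max_rounds=3):
    """Ask, evaluate, and revise until every check passes or rounds run out.

    Returns (last prompt tried, its answer, names of checks that failed).
    An empty list of failed checks means the answer passed.
    """
    if max_rounds < 1:
        raise ValueError("max_rounds must be at least 1")
    for round_no in range(1, max_rounds + 1):
        answer = ask_model(prompt)
        failed = [name for name, check in checks.items() if not check(answer)]
        if not failed or round_no == max_rounds:
            return prompt, answer, failed
        prompt = revise(prompt, failed)  # human or scripted revision step
    raise AssertionError("unreachable")


# Stand-in for a real model call (hypothetical): it only adds a citation
# marker when the prompt asks for sources.
def fake_model(prompt):
    return "Exercise plan [1]" if "cite" in prompt.lower() else "Exercise plan"


def add_missing_instruction(prompt, failed):
    return prompt + " Cite sources for each recommendation."


checks = {"has_citation": lambda answer: "[" in answer}
final_prompt, final_answer, failed_checks = refine_prompt(
    "Draft an incident response exercise plan.",
    fake_model,
    checks,
    add_missing_instruction,
)
```

The round limit matters as much as the checks: it is a guard against the overthinking trap of endless iteration, forcing a decision about whether the prompt, the data, or the task definition needs to change.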

Looking ahead, we believe that successful enterprise adoption will depend on building a continuous learning culture — one that supports the upskilling of cybersecurity teams over time. As with any transformational technology initiative, this will require sustained investments in systems, tools, consulting, and training services.

Editor’s Notes:

- Special thanks to our chatbot research assistants for their contributions to research, analysis and editorial review.

- The feature image for this blog post was generated by ChatGPT with human guidance.

References

1. MIT Horizon – Critical Thinking in the Age of AI, 19-March-2025

2. Yahoo Finance – AI is leading to the ‘revenge of the liberal arts,’ says a Goldman tech exec with a history degree, 17-May-2024

3. Deloitte – GenAI impacts to the cybersecurity workforce that every CISO must consider, 21-Nov-2024

4. Microsoft – The Impact of Generative AI on Critical Thinking: Self-Reported Reductions in Cognitive Effort and Confidence Effects From a Survey of Knowledge Workers, 25-Jan-2025

5. Societies – AI Tools in Society: Impacts on Cognitive Offloading and the Future of Critical Thinking, 3-Jan-2025, https://doi.org/10.3390/soc15010006

6. World Economic Forum – Future of Jobs Report 2025 – Insight Report, January 2025

7. Pew Research Center – How the U.S. Public and AI Experts View Artificial Intelligence, 3-April-2025

8. Slack – The Fall 2024 Workforce Index Shows Executives and Employees Investing in AI, but Uncertainty Holding Back Adoption, 12-Nov-2024

9. Forbes – The Great Skill Shift: How AI Is Transforming 70% Of Jobs By 2030, 4-April-2025

10. Forbes – AI Is Not Your Talent Strategy, 5-April-2025

11. Microsoft – Microsoft CTO breaks down how he sees software developer jobs evolving in the next 5 years, 3-April-2025

12. Axios – Perplexity: Why AI fundraising has become a rat race, 19-May-2024

13. Windows Central – Meta’s Chief AI scientist says “AI is not replacing people”: Humans will boss AI around, including superintelligence, 9-April-2025

14. Check Point Software Technologies – The Cyber Security Professional’s Guide to Prompt Engineering, 24-April-2024

15. CSO – How gen AI helps entry-level SOC analysts improve their skills, 5-March-2024

16. Sophos – Beyond the hype: The business reality of AI for cybersecurity, 28-Jan-2025

17. Hoxhunt – How Can Generative AI Be Used in Cybersecurity Training?, 14-March-2025

18. SANS – Building a Generative AI-Powered Cybersecurity Workforce, 10-May-2024

19. KPMG – How the CISO can help the business kickstart generative AI projects

20. Deloitte – How can organizations engineer quality software in the age of generative AI?

21. McKinsey & Company – The cybersecurity provider’s next opportunity: Making AI safe, 14-Nov-2024

22. Goldman Sachs AI Exchanges Podcast – CIO Marco Argenti on the future of AI in the workplace, 6-March-2025

23. NYTimes – Yes, A.I. Can Be Really Dumb. But It’s Still a Good Tutor., 17-May-2024

24. Fortune – Shopify is saying the quiet part out loud: AI will replace new hiring, other CEOs just won’t admit it, 10-April-2025

25. Forbes – 5 Powerful AI Prompts That Can Boost Any Business Idea, 15-April-2025